TimeArena

👋 Hi there! This is the project page for our paper “TIMEARENA: Shaping Efficient Multitasking Language Agents in a Time-Aware Simulation”.

TimeArena Code

Code for running TimeArena and our experiments. Coming soon!

TimeArena Paper

Everything you need to know about our work.

Demo videos

Parallel Processing

Serial Processing

Abstract

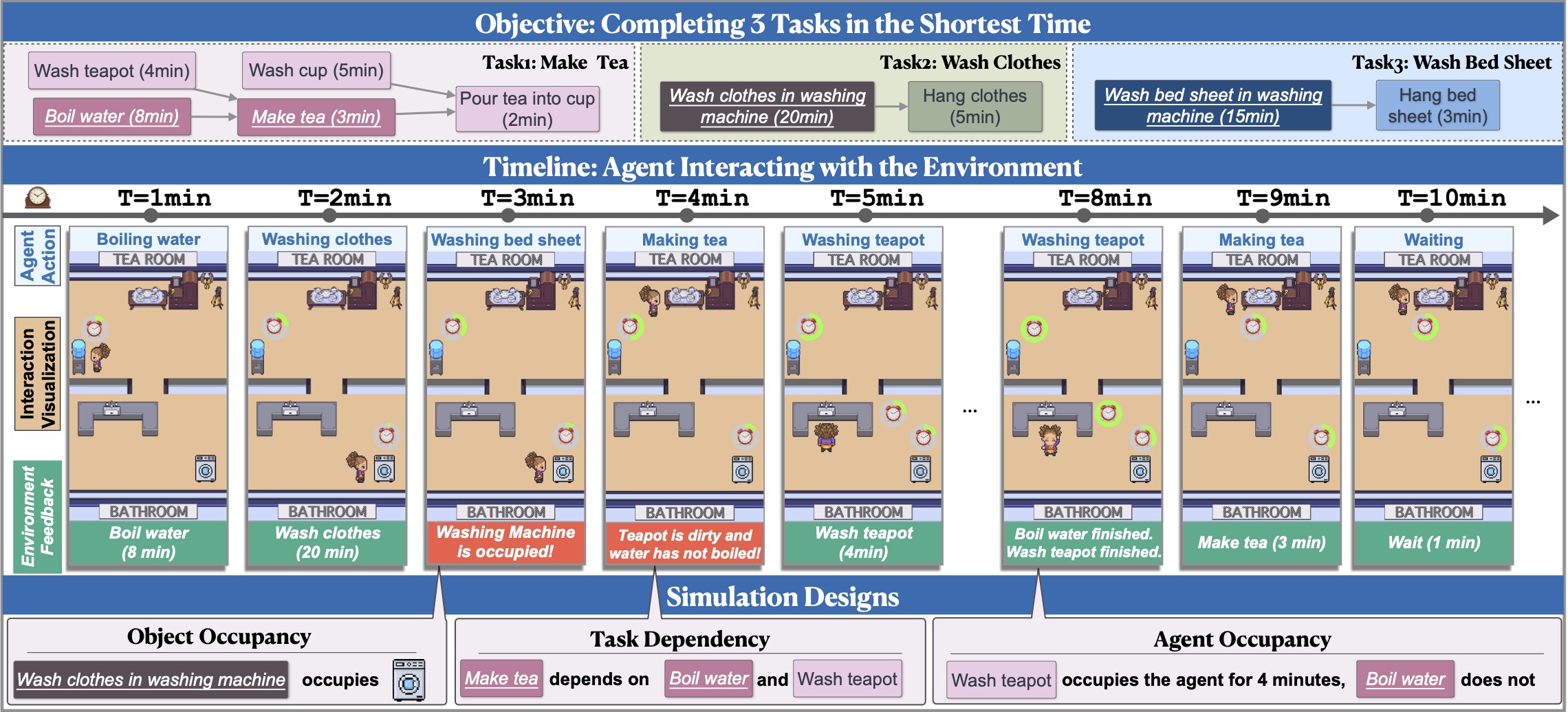

Despite remarkable advancements in emulating human-like behavior through Large Language Models (LLMs), current textual simulations do not adequately address the notion of time. To this end, we introduce TimeArena, a novel textual simulated environment that incorporates complex temporal dynamics and constraints that better reflect real-life planning scenarios. In TimeArena, agents are asked to complete multiple tasks as soon as possible and may process tasks in parallel to save time. We implement dependencies between actions, the duration of each action, and the occupancy of both the agent and the objects in the environment. TimeArena is grounded in 30 real-world tasks spanning cooking, household activities, and laboratory work. We conduct extensive experiments with various state-of-the-art LLMs using TimeArena. Our findings reveal that even the most powerful models, e.g., GPT-4, still lag behind humans in effective multitasking, underscoring the need for enhanced temporal awareness in the development of language agents.

TimeArena

Introduction: A Time-Aware Simulation

Existing textual simulations for language agents overlook the notion of time, including temporal and resource constraints. Our paper, TIMEARENA: Shaping Efficient Multitasking Language Agents in a Time-Aware Simulation, introduces a dynamic and interactive environment that integrates time to enable human-like efficient multitasking.

The Design of TimeArena: Duration, Dependency, and Occupancy

The integration of time requires the agent to consider three factors:
1) Time Duration and Dependency: Each action takes time to complete and may depend on other actions being finished first, so agents must strategize and prioritize based on time constraints and task progress.
2) Agent Occupancy: Some actions occupy the agent, leaving it unable to perform other actions at the same time.
3) Object Occupancy: Some objects may be occupied for a period of time, so agents must work with the objects currently available in the environment.
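To make the three factors concrete, here is a minimal Python sketch of how an environment might decide whether an action can start. The `Action` fields and the `check` function are hypothetical illustrations, not TimeArena's actual code (which is not yet released), and the cooking actions are invented examples.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Action:
    name: str
    duration: int                     # minutes the action takes
    deps: frozenset = frozenset()     # names of actions that must finish first
    objects: frozenset = frozenset()  # objects held while the action runs
    occupies_agent: bool = True       # False if the agent is free meanwhile (e.g. boiling)

def check(action, finished, running):
    """Return None if `action` may start now, otherwise the reason it may not.

    finished: set of names of completed actions
    running:  list of Action objects currently in progress
    """
    # 1) Dependency: every prerequisite must already be finished.
    missing = action.deps - finished
    if missing:
        return f"dependency: needs {sorted(missing)}"
    # 2) Agent occupancy: the agent can do only one occupying action at a time.
    if action.occupies_agent and any(a.occupies_agent for a in running):
        return "agent occupied"
    # 3) Object occupancy: an object held by a running action is unavailable.
    held = set().union(*(a.objects for a in running))
    clash = action.objects & held
    if clash:
        return f"object occupied: {sorted(clash)}"
    return None

# Hypothetical cooking actions.
wash = Action("wash pot", 2, objects=frozenset({"pot"}))
boil = Action("boil water", 10, deps=frozenset({"wash pot"}),
              objects=frozenset({"pot", "stove"}), occupies_agent=False)
chop = Action("chop carrot", 3, objects=frozenset({"knife"}))
rinse = Action("rinse pot", 1, objects=frozenset({"pot"}))

print(check(boil, finished=set(), running=[]))             # dependency: needs ['wash pot']
print(check(chop, finished={"wash pot"}, running=[boil]))  # None: agent is free while water boils
print(check(wash, finished=set(), running=[chop]))         # agent occupied
print(check(rinse, finished=set(), running=[boil]))        # object occupied: ['pot']
```

Marking `boil` with `occupies_agent=False` is what makes parallel processing possible in this sketch: the agent can chop while the water boils, but it still cannot touch the pot.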

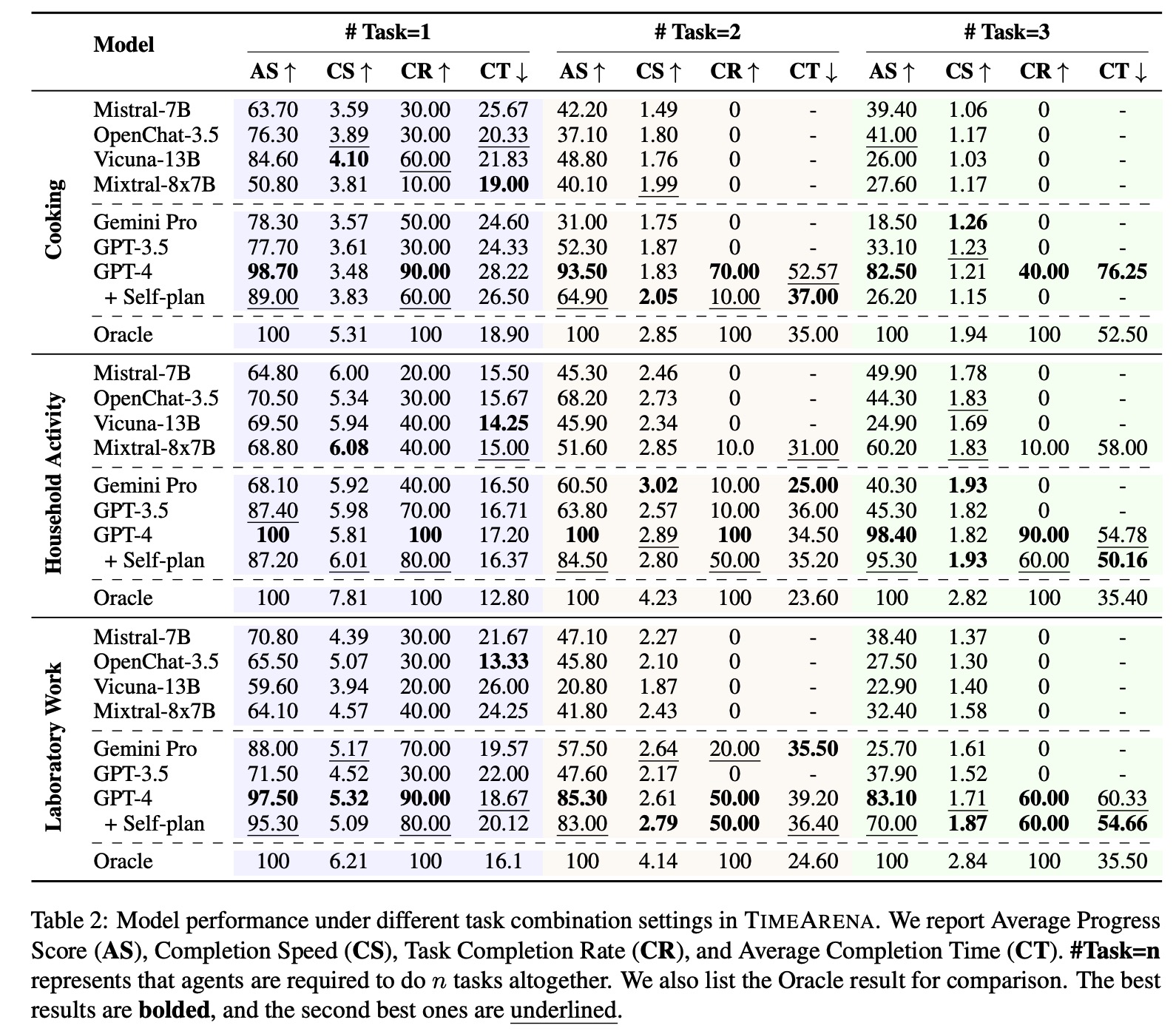

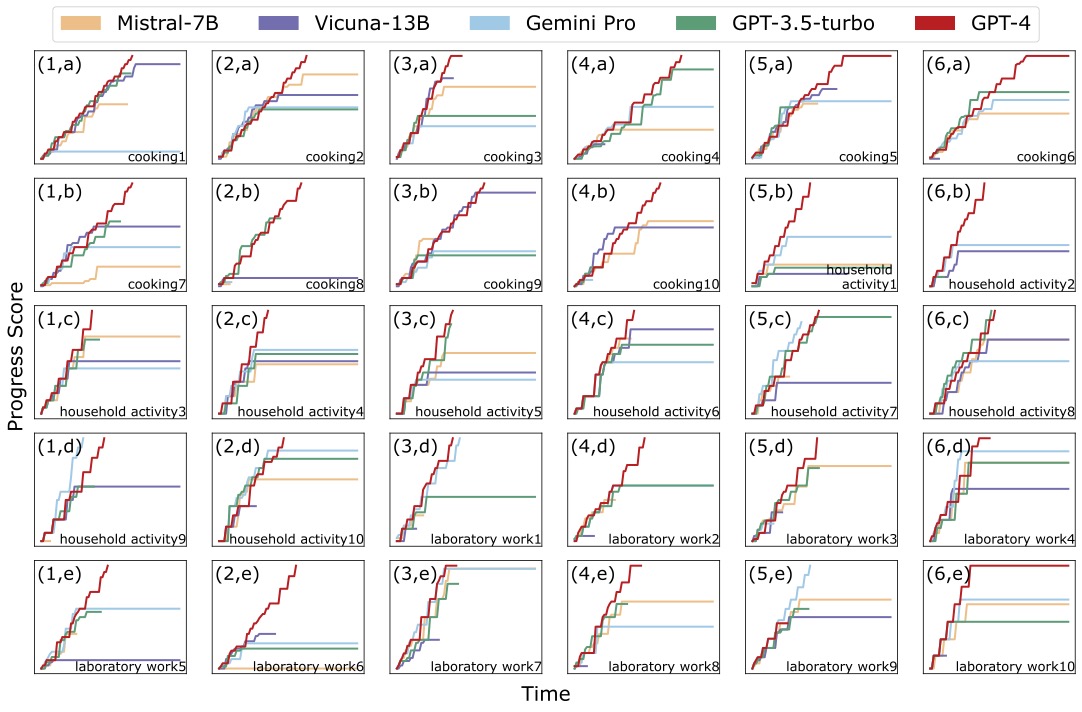

Analyzing Language Agent: Multitasking and Parallel Processing

Efficient multitasking involves both handling multiple tasks and processing them in parallel to save time. When multitasking, most models struggle to complete 2 or 3 tasks, highlighting their limited capabilities. As for parallel processing, even GPT-4 takes longer than the oracle to complete the tasks, showing that parallel processing remains challenging.
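A small worked example shows where the gap between serial and parallel processing comes from. The sketch below is a simplification, assuming a fixed plan listed in dependency order, no object conflicts, and that background actions (like boiling) free the agent as soon as they start; the `Step` type, the `completion_time` function, and the task durations are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Step:
    name: str
    duration: int               # minutes
    deps: tuple = ()            # names of steps that must finish first
    occupies_agent: bool = True

def completion_time(plan, parallel=True):
    """Start each step of `plan` (listed in dependency order) as early as
    allowed and return the overall completion time.

    Serial mode waits for every step to finish before starting the next one;
    parallel mode only waits for the agent to be free and for dependencies.
    """
    finish = {}       # step name -> finish time
    agent_free = 0    # earliest time the agent can start its next step
    prev_end = 0      # finish time of the previous step (used in serial mode)
    for s in plan:
        start = max([agent_free] + [finish[d] for d in s.deps])
        if not parallel:
            start = max(start, prev_end)
        end = start + s.duration
        finish[s.name] = end
        prev_end = end
        # A background step frees the agent as soon as it has been started.
        agent_free = end if s.occupies_agent else start
    return max(finish.values())

plan = [
    Step("wash pot", 2),
    Step("boil water", 10, deps=("wash pot",), occupies_agent=False),
    Step("chop carrot", 3),
    Step("sweep floor", 5),                       # a second, independent task
    Step("add carrot", 1, deps=("boil water", "chop carrot")),
]
print(completion_time(plan, parallel=False))  # 21: durations simply add up
print(completion_time(plan, parallel=True))   # 13: chopping and sweeping overlap the boil
```

Here 13 minutes is also the length of the longest dependency chain (wash pot, boil water, add carrot: 2 + 10 + 1), so no schedule can finish sooner, while an agent that simply waits out the boil needs the full 21 minutes.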

Can language agents master multitasking?

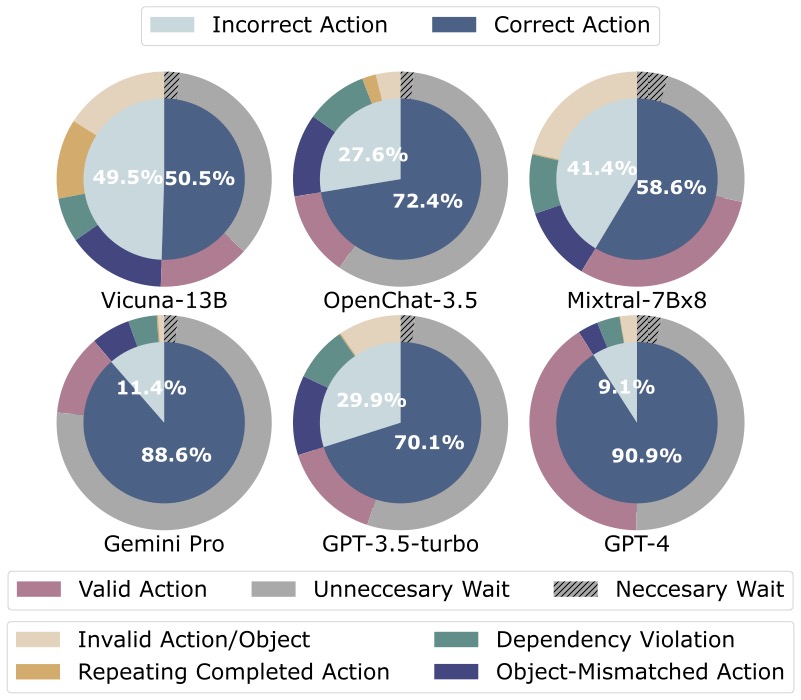

The high proportion of actions that violate dependencies or involve mismatched objects suggests that language agents struggle to manage complex interdependencies between actions during multitasking.
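One way to measure this is to label each attempted action and compute the share of each error type. The sketch below assumes a toy affordance table (which objects a verb applies to) and a toy prerequisite table; `AFFORDANCES`, `PREREQS`, `classify`, and `error_profile` are hypothetical, and durations are ignored so that only the two error types discussed above are checked.

```python
from collections import Counter

# Hypothetical affordance table: the objects each verb can be applied to.
AFFORDANCES = {"wash": {"pot", "carrot"}, "chop": {"carrot"}, "boil": {"water"}}
# Hypothetical prerequisites: (verb, object) -> actions that must be done first.
PREREQS = {("boil", "water"): {("wash", "pot")},
           ("chop", "carrot"): {("wash", "carrot")}}

def classify(verb, obj, done):
    """Label one attempted action given the set of actions already done."""
    if obj not in AFFORDANCES.get(verb, set()):
        return "object mismatch"
    if not PREREQS.get((verb, obj), set()) <= done:
        return "dependency violation"
    return "valid"

def error_profile(actions):
    """Return the fraction of attempted actions that fall under each label."""
    done, counts = set(), Counter()
    for verb, obj in actions:
        label = classify(verb, obj, done)
        counts[label] += 1
        if label == "valid":
            done.add((verb, obj))
    return {label: n / len(actions) for label, n in counts.items()}

log = [("chop", "carrot"),   # carrot not washed yet -> dependency violation
       ("boil", "pot"),      # the verb expects water, not a pot -> object mismatch
       ("wash", "pot"), ("boil", "water"),
       ("wash", "carrot"), ("chop", "carrot")]
print(error_profile(log))
```

In this toy log, one action in six is a dependency violation, one in six is an object mismatch, and the remaining four are valid.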

Are language agents aware of parallel processing?

Waiting actions make up over half of the valid actions performed by different LLMs, and necessary waits account for only a small fraction of them. This indicates that agents tend to wait unnecessarily, showing a lack of awareness of parallel processing.

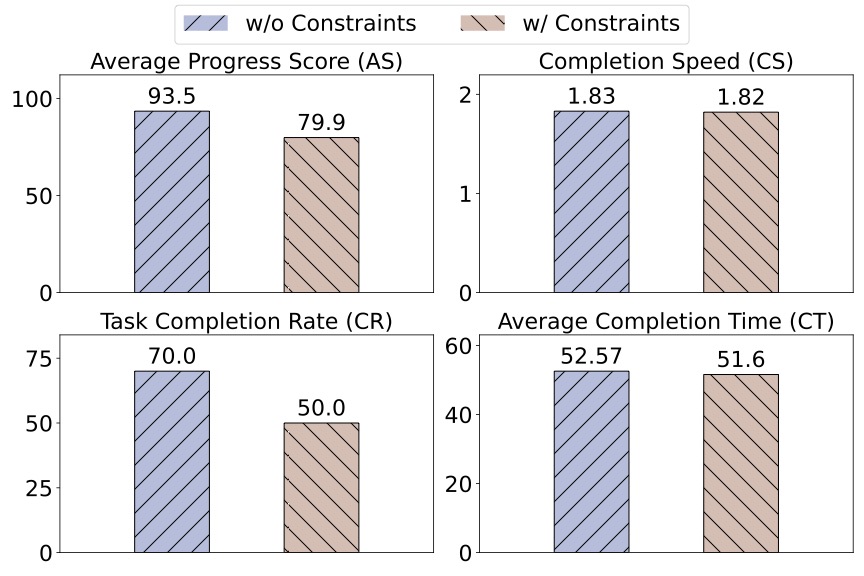
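The distinction between necessary and unnecessary waiting can be made precise: a wait is necessary only if the agent had nothing else it could legally start. The sketch below assumes each turn of a trajectory records whether the action was valid and which non-wait actions were available at that moment; the `Turn` record, `wait_stats`, and the trace are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Turn:
    action: str           # what the agent did, e.g. "wait" or "chop carrot"
    valid: bool           # whether the environment accepted the action
    available: frozenset  # non-wait actions the agent could legally have started

def wait_stats(turns):
    """Share of valid actions that are waits, and share of waits that were necessary."""
    valid = [t for t in turns if t.valid]
    waits = [t for t in valid if t.action == "wait"]
    necessary = [t for t in waits if not t.available]  # nothing else could be done
    return {
        "wait_share_of_valid": len(waits) / len(valid) if valid else 0.0,
        "necessary_share_of_waits": len(necessary) / len(waits) if waits else 0.0,
    }

other = frozenset({"chop carrot", "sweep floor"})
trace = [
    Turn("wash pot", True, frozenset({"wash pot", "sweep floor"})),
    Turn("boil water", True, frozenset({"boil water", "sweep floor"})),
    Turn("wait", True, other),                # unnecessary: could chop or sweep
    Turn("wait", True, other),                # unnecessary
    Turn("add carrot", False, other),         # invalid: water not boiled yet
    Turn("chop carrot", True, other),
    Turn("sweep floor", True, frozenset({"sweep floor"})),
    Turn("wait", True, frozenset()),          # necessary: nothing to do until the water boils
]
print(wait_stats(trace))  # 3 of 7 valid actions are waits; 1 of those 3 was necessary
```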

Do resource constraints affect the multitasking of language agents?

Resource constraints do not affect task completion time or speed, revealing that GPT-4 rarely attempts to process tasks in parallel. However, a noticeable decline in both completion rate and progress score indicates that the constraints hinder the models from comprehending and decomposing multiple tasks.
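The reasoning behind the first observation can be checked with a toy calculation: limiting a shared resource only lengthens a schedule that actually overlaps actions. The sketch below assumes each job is a background action (e.g., boiling) that holds one stove for its whole duration and that starting a job takes no agent time; `makespan` and the durations are hypothetical, not the paper's setup.

```python
def makespan(durations, stoves, parallel):
    """Start the jobs in order, each on the stove that frees up first, and
    return the time at which the last job finishes. In serial mode a job
    also waits for the previous job to finish."""
    free_at = [0] * stoves  # time at which each stove becomes free
    prev_end = 0
    for d in durations:
        i = min(range(stoves), key=lambda k: free_at[k])
        start = free_at[i] if parallel else max(free_at[i], prev_end)
        prev_end = start + d
        free_at[i] = prev_end
    return max(free_at)

jobs = [10, 8]  # e.g. boil water for soup, boil water for pasta (minutes)
for parallel in (False, True):
    print("parallel" if parallel else "serial",
          [makespan(jobs, stoves, parallel) for stoves in (2, 1)])
# prints: serial [18, 18], then parallel [10, 18]
```

A serial agent finishes in 18 minutes whether one or two stoves are available, so its completion time cannot reveal the constraint; only an agent that overlaps the two jobs (10 minutes with two stoves) is slowed down by having a single stove.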

Language agents trapped in infinite loops

Vicuna, Mistral, Gemini, and GPT-3.5 often stop making progress before completing all tasks because they alternate between performing incorrect actions and waiting. These models struggle to correct their actions based on the feedback they receive after waiting, which traps them in infinite loops.
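A loop of this kind is easy to detect mechanically, for example to end an episode early or to prompt the agent to reconsider. The sketch below is a hypothetical check, not part of TimeArena as described: `repeating_tail` looks for a short cycle of actions that the end of the history repeats several times in a row, with `max_period` and `min_repeats` as assumed thresholds.

```python
def repeating_tail(history, max_period=4, min_repeats=3):
    """Return the shortest cycle (period <= max_period) that the end of
    `history` repeats at least `min_repeats` times in a row, or None."""
    for period in range(1, max_period + 1):
        needed = period * min_repeats
        if len(history) < needed:
            break  # longer periods need even more history
        tail = history[-needed:]
        cycle = tail[:period]
        if all(tail[i] == cycle[i % period] for i in range(needed)):
            return cycle
    return None

stuck = ["wash pot", "add carrot", "wait", "add carrot", "wait", "add carrot", "wait"]
print(repeating_tail(stuck))                                      # ['add carrot', 'wait']
print(repeating_tail(["wash pot", "boil water", "chop carrot"]))  # None
```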

In conclusion, our findings indicate that as tasks become more complex, the models struggle to complete them and often fail to recognize opportunities for parallel processing. This reveals that language agents still have significant room for improvement when completing multiple tasks in dynamic environments, highlighting an area for future research.

Citation

@article{zhang2024timearena,
  title={TimeArena: Shaping Efficient Multitasking Language Agents in a Time-Aware Simulation},
  author={Zhang, Yikai and Yuan, Siyu and Hu, Caiyu and Richardson, Kyle and Xiao, Yanghua and Chen, Jiangjie},
  journal={arXiv preprint arXiv:2402.05733},
  year={2024}
}